The basic idea about SLAM is that if I were a robot, and I know two fixed points: (x1, y1) and (x2, y2). I now can see point1, and the measurement tells me it is 5 m away from me. When I turn my head for 30 degrees, I can see point2, and the measurements tell me it is 10m away. As a result of knowing my relative position with respect to point1 and point2, I can localize myself.

But here comes the question. I have to look back to see point1 and point2 every time I move forward, to know where I am. This kind of looking back is very stupid. So, a smart PhD student comes up with a solution: as soon as I know where I am with respect to point1, point2, I add more points: point3, point4... because I know the coordinate of myself, I can measure the location of these points (mapping). I could use those points afterwards for localization.

Therefore, SLAM means knowing my localization and the coordinates of the fixed points (feature mapping). Because SLAM introduces noise no matter when measuring distance or calculating the rotation, traveled distance, SLAM requires Kalman filter.

MonoSLAM also refers to real time structure from motion. The fixed points are the visual features, and measuring distance becomes match feature and then triangulate.

MonoSLAM is implemented in MATLAB

here. The authors introduce MonoSLAM in the following way. SLAM in a 3D environment - Need of 3D landmarks - Camera based method - use of visual 2D interest points - camera gives 2D interest points - no depth information - how to get depth? - by using camera motion - How to estimate camera motion - by using 3D landmarks.

Therefore, 3D landmark = 2D interest point + depth.

At the beginning, a few initial landmarks must be provided for initialization, otherwise the camera motion cannot be estimated. After the initialization phase, an iterative process begins. Step 1: 2D interest points must evolve into 3D landmarks when the estimate of their depth is good enough. Step 2: 3D landmarks contribute to the estimation of the state, including camera and the map.

The camera and map state is estimated through an EKF. After initialization, this filter performs a model based prediction, compares it to the measurements (camera data), and thus applies a correction on the global state according to the innovation.

To use camera data, we have to know its resolution, focal length, CMOS sensor dimension, and thus we need to perform a calibration step, such as the MATLAB toolbox.

The landmarks could be initialized manually, or by using an object of known size at the beginning.

Since we use EKF, we need to have a model, and the only model we can have for the camera is a kinematic one, instead of a dynamic one. This model allows only constant velocity movement. However, the camera may not move in this way. Hence we have to add a noise to allow for changes in direction or speed. This is necessary for the EKF to assess the confidence into its estimates.

A common noise model is the additive noise model, where random gaussian noise is added on each state component. But here we use a non linear, acceleration based noise model. To enable several trajectories around the previous one, we consider the linear and angular accelerations to be given by random zero mean gaussian variables. The standard deviation of these variables allows us to tune how uneven the motion can be.

The prediction step randomly chooses one trajectory among these possibilities, and if necessary the correction step will bring the system back in tracks.

Landmarks are identified from interest points. The Shi-Tomasi detector is used to define salient points. The detection is based on local extrema of the gradient analysis of 15x15 pixels patches. The eigenvalues of the matrix are used to assess the quality of a potential interest point, through a threshold. When a new interest point is identified, it is attached to its neighborhood, namely its 15x15 patch, to describe this point. The patch is normalized to increase the detection robustness for illumination change.

Since there is no depth information from a single image, we need to use motion to recover the depth. This is done using particle filter. When an interest point is selected, we only know that this point belongs to a certain line called the epipolar line. To estimate where the point lies on the epipolar line, the line is uniformly sampled by a given number of particles, where each particle represents a depth hypothesis. A maximal depth hypothesis related to the size of the environment limits the search zone.

When an interest point has been registered, meaning that its patch and epipolar line are known, the algorithm tries to match it in the subsequent pictures. An interest point without any depth information is tracked using correlation over a window. The size of the window is constant, defied by a maximal acceleration in terms of pixels. Interest points moving out of the image or weakly matched are discarded.

During the tracking, after the correlation has matched an interest point, all the particles associated to this point are re-projected in the picture. Resampling is performed to promote hypothesis best-linked to the correlation result, and 25% of the particles are resampled uniformly to avoid early convergence. When the variance of the depth hypotheses shrinks below a threshold, the estimation is considered reliable. The interest point is then attached a depth and becomes a landmark, with a large uncertainty along the epipolar line to allow the EKF to modify its position easily if necessary.

Compared to the interest point management (initialization, tracking, depth estimation and upgrade as landmark), landmark tracking is much easier. The EKF prediction gives an estimate of the camera position and orientation, as well as uncertainties on these quantities and on the landmarks position. The position and orientation of the camera, relative to the landmarks position, allow us to predict how the landmarks will be projected onto the image plane, along with an uncertainty area. Both the uncertainty of the camera position and orientation and the uncertainty of landmark position are taken into account to compute this uncertainty area. The larger the uncertainty is, the wider the search area is in the new picture. The the search is simply performed by correlation within this area. The difference between the EKF prediction and the camera observation gives the innovation signal of the filter.

When the algorithm is running, at least one landmark must always be visible. Thus, as soon as the number of visible landmarks falls below a chosen number, a search procedure is launched, in order to identify new interest points. This is achieved by searching interest points in randomly drawn windows. The only constraint on these windows is they have to be far away from the currently tracked landmarks and interest points. Otherwise, no effort is spent in trying to pick up interest points in the most useful place for the algorithm.

The innovation signal is at the core of the EKF correction step, which affects the whole state vector. We previously explained how the innovation signal is obtained, without any details concerning the projection.

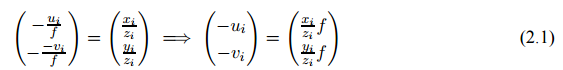

The simple pinhole camera model is used to perform reprojection. No distortion correction is applied here. For a landmark yi described by its coordinates in the world frame, we have to express its position relatively to the camera.

Correction is based on the innovation. A simple additive noise is finally added to the observation model, which enables us to tune the confidence of the EKF towards the observations. To increase the robustness of the correction algorithm, landmarks weakly matched (bad correlation results) or unmatched (not seen when tey are predicted to be seen) along several frames are removed from the map. EKF is very likely to be lost if the landmark association is bad.